The Evolution of Open-Source Intelligence in Enterprise System Architecture

The release of GLM-5 marks a definitive pivot in the trajectory of artificial intelligence, moving the industry beyond the era of mere code snippet generation. This transition represents a fundamental shift from “vibe coding” to true agentic engineering, where models are tasked with constructing complete, functioning systems rather than isolated components. By making this capability open source, GLM-5 challenges the dominance of proprietary models in the high-stakes arena of autonomous software development.

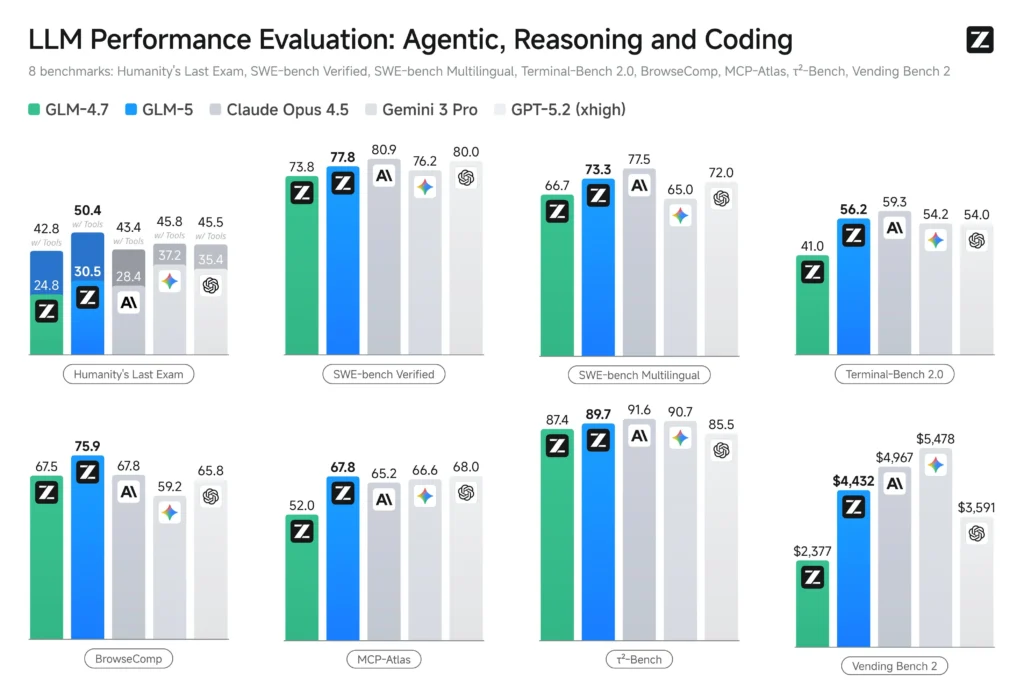

In practical use, the model's performance approaches that of Claude Opus 4.5. It excels specifically in complex system design and in tasks that require sustained, long-horizon planning rather than one-off outputs. As organizations seek to deploy AI that can manage end-to-end workflows, GLM-5 offers a robust, accessible alternative to closed-source solutions.

Key Insights at a Glance

- Massive Scale Expansion: Parameter count has grown from 355bn to 744bn, trained on 28.5trn tokens, significantly boosting reasoning capabilities.

- Agentic Engineering Focus: The model is optimized for autonomous task execution, moving beyond simple code generation to complex system building.

- Architectural Efficiency: The Slime framework improves continuous learning through asynchronous reinforcement learning, while DeepSeek Sparse Attention lowers deployment costs.

- Benchmark Dominance: GLM-5 outperforms Gemini 3 Pro in specific software engineering tasks, setting new standards for open-source models.

- Operational Management: Leading results among open-source models in an economic simulation suggest the model can manage resources and pursue long-term goals.

The Limits of Generative Coding

For years, the utility of large language models in software development was restricted to assisting with syntax or prototyping user interfaces. While valuable, these “vibe coding” capabilities often failed when applied to rigorous, multi-step engineering problems. Yet, as demands for autonomy rise, is raw generation capability enough to sustain complex, multi-step workflows? The industry requires models that can maintain coherent goals across long horizons rather than simply predicting the next token in a sequence.

Addressing the Complexity Crisis

The core problem is that smaller or less optimized models cannot handle the “context drift” that occurs during extended tasks. When an AI attempts to build a system, it must remember its initial constraints while executing intermediate steps. Traditional architectures often struggle here, producing disjointed code that fails to compile or to function as a unified whole. To bridge this gap, the model's underlying infrastructure must support sustained reasoning without incurring prohibitive computational costs.

Architectural Innovation Driving Agentic Success

GLM-5 addresses these structural deficits through a massive expansion in scale paired with novel training methodologies. The model has grown from 355bn to 744bn total parameters, with active parameters rising to 40bn, supported by a training corpus of 28.5trn tokens. Raw size is complemented by the Slime framework, which enables asynchronous reinforcement learning at scale. This allows the model to learn continuously from extended interactions, improving post-training efficiency in ways previous iterations could not.
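To put the scale figures above in proportion, the short sketch below derives three ratios from them: how much the total parameter count grew, what share of parameters is active, and how many training tokens there are per parameter. The numbers come from the text; the function name `scale_summary` and the choice of ratios are illustrative assumptions, not metrics published with the model.

```python
# Figures quoted in the article; everything derived from them is plain arithmetic.
PREVIOUS_TOTAL_PARAMS = 355e9   # total parameters of the previous iteration
TOTAL_PARAMS = 744e9            # total parameters of GLM-5
ACTIVE_PARAMS = 40e9            # active parameters of GLM-5
TRAINING_TOKENS = 28.5e12       # size of the training corpus in tokens


def scale_summary():
    """Return illustrative ratios derived from the quoted figures (hypothetical helper)."""
    return {
        "growth_factor": TOTAL_PARAMS / PREVIOUS_TOTAL_PARAMS,
        "active_fraction": ACTIVE_PARAMS / TOTAL_PARAMS,
        "tokens_per_parameter": TRAINING_TOKENS / TOTAL_PARAMS,
    }


if __name__ == "__main__":
    for name, value in scale_summary().items():
        print(f"{name}: {value:.3f}")
```

By this arithmetic, the total parameter count roughly doubled (about 2.1x), about 5.4% of parameters are active, and the corpus works out to about 38 training tokens per parameter.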

Furthermore, the integration of DeepSeek Sparse Attention is a critical development for enterprise deployment. This mechanism maintains high performance over long contexts while simultaneously cutting deployment costs and improving token efficiency. By optimizing how the model attends to information, GLM-5 ensures that long-horizon planning does not degrade into incoherence or become economically unviable for users.
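The article does not describe how DeepSeek Sparse Attention works internally, so the following is only a generic sketch of one common sparse-attention idea: each query attends to its top-k highest-scoring keys instead of all of them. The function `topk_sparse_attention` and its parameters are hypothetical and are not taken from GLM-5 or DeepSeek code.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _softmax(scores):
    # Subtract the maximum for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(query, keys, values, k):
    """Generic top-k sparse attention for a single query (illustrative assumption).

    Scores every key with a scaled dot product, keeps only the k best,
    and returns the softmax-weighted sum of their values together with
    the sorted indices of the selected positions.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [_dot(query, key) * scale for key in keys]
    k = min(k, len(keys))
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = _softmax([scores[i] for i in selected])
    output = [0.0] * len(values[0])
    for weight, index in zip(weights, selected):
        for d, component in enumerate(values[index]):
            output[d] += weight * component
    return output, sorted(selected)


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0], [9.0, 9.0]]
    output, selected = topk_sparse_attention([1.0, 0.0], keys, values, k=2)
    print("attended positions:", selected)
    print("output:", output)
```

In this toy version every key is still scored, so the saving is limited to the softmax and the value aggregation, which touch k positions instead of the full context. Production systems typically rely on a cheaper selection step, and that is where most of the long-context cost reduction would come from.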

Validating Autonomous Capabilities

The theoretical advances of GLM-5 are substantiated by benchmark results that highlight its proficiency in agentic engineering. On SWE-bench-Verified, the model achieved a score of 77.8, and on Terminal Bench 2.0 it reached 56.2, the highest reported results for open-source models. These figures indicate a capability that surpasses established competitors like Gemini 3 Pro in several distinct software engineering tasks.

Perhaps most telling is the model’s performance on Vending Bench 2, a simulation requiring the operation of a vending machine business over a full year. GLM-5 finished with a balance of $4,432, leading other open-source models in operational and economic management. In this simulation, the model functions less like a simple code generator and more like a strategic business manager, effectively balancing resource allocation and operational logistics over time.

Future Outlook

The launch of GLM-5 signals that the frontier of artificial intelligence is decisively shifting from writing isolated lines of code to delivering functioning, end-to-end systems. By combining massive scale with efficient, agentic-focused architecture, the model demonstrates the qualities required for coordinating multi-step processes and managing resources autonomously. As these capabilities mature, the role of AI will evolve from a passive assistant to an active engineer, capable of owning complex development cycles independently.